How to Update data in SQL Server Destination Table from Excel Source using SSIS Incremental Load

Incremental Load is process of comparing the Target data table against the Source Data based on a Id Column or Date Stamp or Time Stamp and updating or loading the data to Target table as per below conditions:

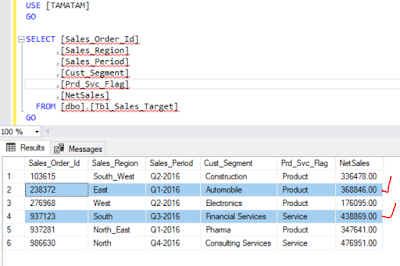

Suppose we have a Destination/Target table which we need to update with Source data:

Data Flow Task Design:

Now we have to Design the above Data Flow task to perform the Incremental Load as follows :

Phase-2 : Connecting to the Excel Source:

First add the Data Flow Task to Control Flow

Phase-3 : Add the Lookup Transformation and set the properties:

General Tab :

Choose Options for General Tab in Lookup Transformation Editor as follows.

Make sure to choose "Ignore Failure" to handle rows with not matching entries.

Update_Existing : C_Order_Id == LKP_Sales_Order_Id && C_NetSales != LKP_NetSales

This condition checks whether the Sales Order exist in both the tables, and also checks whether there is any change/adjustment in the measure NetSales.

--------------------------------------------------------------------------------------------------------

Thanks, TAMATAM ; Business Intelligence & Analytics Professional

--------------------------------------------------------------------------------------------------------

Incremental Load is process of comparing the Target data table against the Source Data based on a Id Column or Date Stamp or Time Stamp and updating or loading the data to Target table as per below conditions:

- If there are any New records in Source data then we have to insert those records in Target data table.

For example, on a Daily/Weekly basis we have to insert the Region wise sales data. - If there are any updated values in Source data then we have to update those records Target data table.

For example, updating the Net Sales data which is adjusted.

Suppose we have a Destination/Target table which we need to update with Source data:

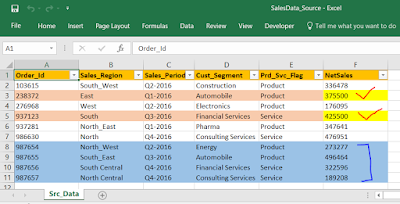

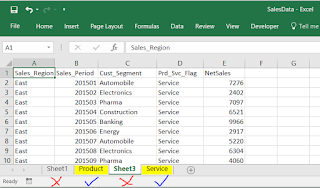

The Source data as follows :

Now if you observes the data in the Source against the Destination Table :

Incremental Load activities to perform here :- First 6 records already exist in the Destination but the NetSales for records 2 and 4 ( Sales_Order_Id : 238372, 937123 ) are modified in Source which need to only Update in Destination Table.

- The Records 7,8,9,10 in the Source are not exist in the Destination which need to Insert in Destination Table.

- The records which does not have any change should not update to Destination, but you can route them to a Flat File if required.

Data Flow Task Design:

Now we have to Design the above Data Flow task to perform the Incremental Load as follows :

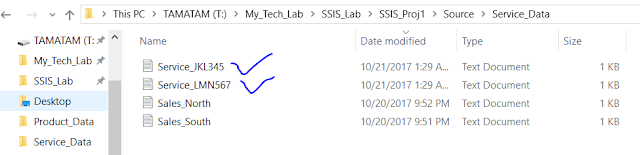

Phase-1 : Create the Connections to the SQL Destination , Excel Source Data and a Flat File :

here , db_TAMATAM : The SQL Connection to the Destination table "Tbl_Sales_Target"

SalesData_Source : The Excel Connection to the Source data file "SalesData_Source.xlsx"

NoUpdateData : The Flat File Connection to the File where we store the data which does not load to the SQL Destination, as they are already exist, and no updates in that.

First add the Data Flow Task to Control Flow

Next add the Excel Source in side the data flow and Convert the Source data as per Destination Table

Phase-3 : Add the Lookup Transformation and set the properties:

General Tab :

Choose Options for General Tab in Lookup Transformation Editor as follows.

Make sure to choose "Ignore Failure" to handle rows with not matching entries.

Connection Tab :

Write an SQL Query to Select your desired Columns from Destination table which we further use for the Lookup by Mapping with Source Columns

Columns Tab :

Here we are mapping the Lookup Column "Sales_Order_Id"(from Destination) with C_Order_Id(from Source ).

The other column "NetSales" is not using for Lookup, but I selected that to use further in Conditional Split in next step.

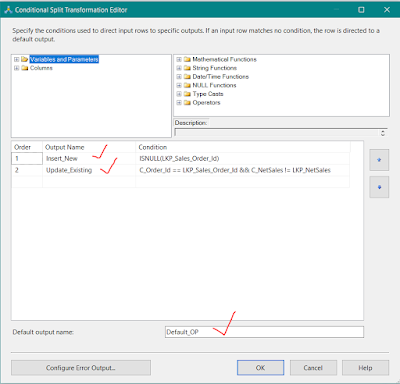

Phase-4 : Add the Condition Split Transformation and set the properties:

Conditions:

Insert_New : ISNULL(LKP_Sales_Order_Id)

This condition checks whether LKP_Sales_Order_Id is NULL or not. If its true then those new records will "Insert" into the Destination table.

Note :

From the above step Lookup Transformation will returns null when there is no matching "C_Order_Id" in the Source, when comparing with Destination column "Sales_Order_Id".

Update_Existing : C_Order_Id == LKP_Sales_Order_Id && C_NetSales != LKP_NetSales

If it is true then those modified records will "Update" into Destination Table.

Default_OP : This is the default output which does not met any the above to conditions.

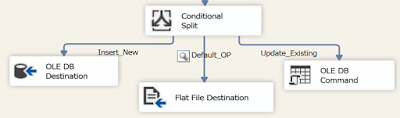

Phase-5 : Connect the Conditional Split Data to Destinations:

From the above Conditional Split Transformation, data will be transferred to the Destinations,based on condtions as follows :

OLE DB Destination: The records which are new in the Source will be Inserted to the Destination Table "Tbl_Sales_Target".

OLE DB Command : The records which have the modified "NetSales" in the Source will be Updated to the Destination Table "Tbl_Sales_Target", defined in the OLE DB Transforation with Parameter mapping.

OLE DB Command Transformation :

We have to set the OLE DB Command Transformation properties as follows :

Connection Managers Tab :

Select the DB Connection

Component Properties Tab :

Write an Update statement to Update the Destination table columns with Source columns based on Parameter(?) mapping done in next step.

UPDATE [dbo].[Tbl_Sales_Target]

SET [Sales_Order_Id]=?

,[Sales_Region]=?

,[Sales_Period]=?

,[Cust_Segment]=?

,[Prd_Svc_Flag]=?

,[NetSales]=?

WHERE [Sales_Order_Id]=?

SET [Sales_Order_Id]=?

,[Sales_Region]=?

,[Sales_Period]=?

,[Cust_Segment]=?

,[Prd_Svc_Flag]=?

,[NetSales]=?

WHERE [Sales_Order_Id]=?

Column Mappings Tab :

Map the Input Source Columns with Parameters created in above Step.

Now the OLE DB Transformation is ready to use.

Flat File Destination : The records which are Neither New Nor Modified will be moved to this Flat File.

Final Phase : Execute the Package

---------------------------------------------------------------------------------------------------------------

Final Output :

Before Update :

After Update :

--------------------------------------------------------------------------------------------------------

Thanks, TAMATAM ; Business Intelligence & Analytics Professional

--------------------------------------------------------------------------------------------------------